Proxy Cache

Overview

The Proxy Cache Policy improves API performance and reduces latency by caching API responses. Frequently requested data is served from the cache instead of querying the backend on every request, which lowers backend load and shortens response times for clients.

Choosing an appropriate TTL (Time-To-Live) value avoids expiring cached responses too often while still ensuring that updated data is served when needed.

Configuration Details

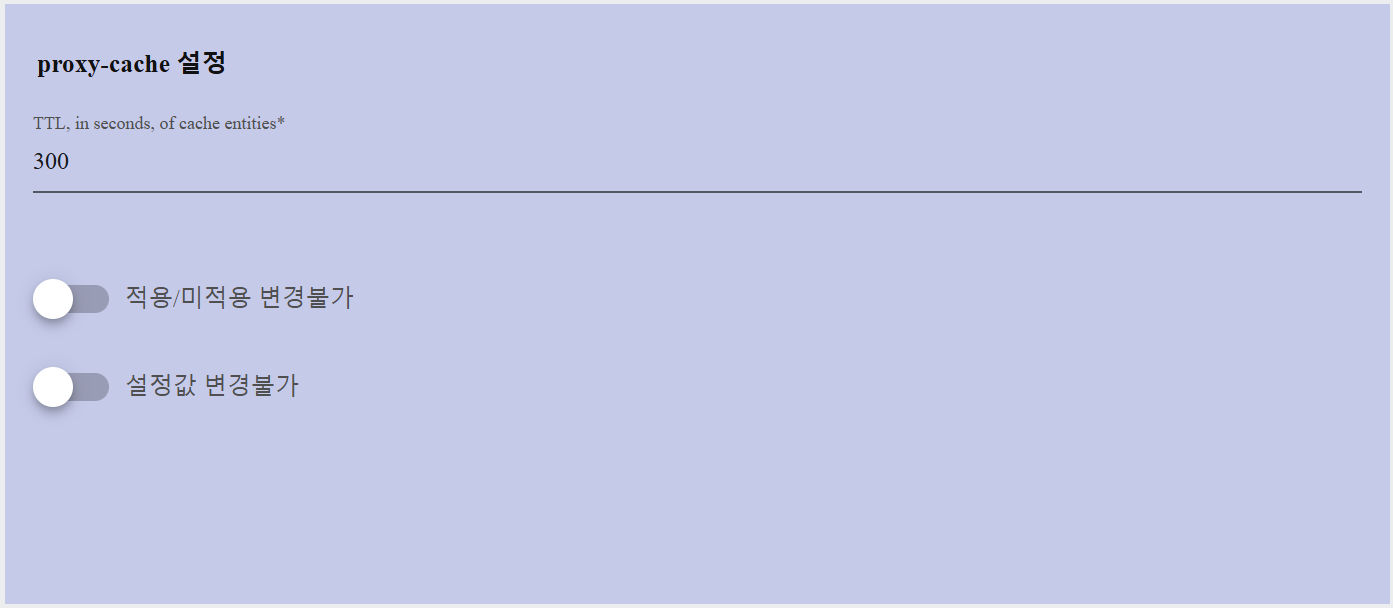

The Proxy Cache Policy includes essential settings to control how responses are cached and served.

| Field | Description | Example Values | Notes |
|---|---|---|---|
| TTL (Time-To-Live) | Defines the lifespan of cached responses (in seconds). | 300 | After this duration, cached responses expire and must be re-fetched from the backend. |

Note:

- Shorter TTL values ensure more frequent updates from the backend, while longer TTL values enhance performance by reducing backend queries.

- Once the TTL expires, cached responses are removed, and the next request triggers a fresh response from the backend.

- Developers should balance freshness against performance when configuring the cache duration.
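
The expiry behavior described in these notes can be illustrated with a minimal sketch. This is not the policy's actual implementation: the `TTLCache` class, its `get` method, the `fetch` callback, and the `/orders` key are all hypothetical names chosen for illustration, and the sketch assumes one cached response per key with a TTL given in seconds. A fake clock is injected so the expiry can be shown without waiting.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Illustrative in-memory cache (hypothetical, not the policy's real code)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        # Maps a cache key to (expiry timestamp, cached response).
        self._entries: Dict[str, Tuple[float, str]] = {}
        self.backend_calls = 0  # counts how often the backend was queried

    def get(self, key: str, fetch_from_backend: Callable[[str], str]) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None:
            expires_at, value = entry
            if now < expires_at:
                return value  # cache hit: backend is not queried
            del self._entries[key]  # TTL expired: remove the stale response
        # Cache miss or expired entry: fetch a fresh response and store it.
        value = fetch_from_backend(key)
        self.backend_calls += 1
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


# Usage with a fake clock so the example runs instantly.
fake_now = [0.0]
cache = TTLCache(ttl_seconds=300, clock=lambda: fake_now[0])


def fetch(key: str) -> str:
    # Stand-in for a real backend call.
    return f"response for {key}"


cache.get("/orders", fetch)   # t=0:   miss, backend queried
fake_now[0] = 299.0
cache.get("/orders", fetch)   # t=299: hit, served from cache
fake_now[0] = 300.0
cache.get("/orders", fetch)   # t=300: TTL expired, backend queried again
print(cache.backend_calls)    # prints 2
```

With a TTL of 300 seconds, a single key in this sketch triggers at most one backend fetch per 300-second window, no matter how many requests arrive in between. Halving the TTL roughly doubles that upper bound in exchange for fresher data.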