Application Auto Scaling Settings

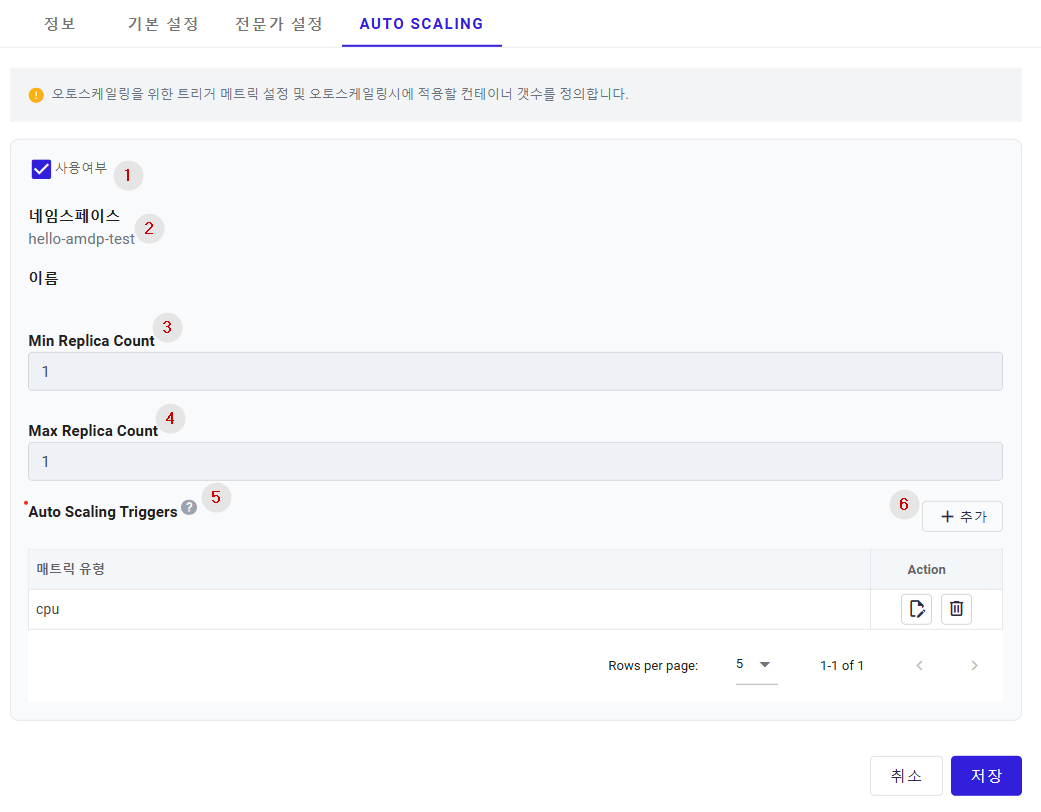

Auto Scaling settings let an application scale horizontally to match the characteristics of its services. Based on these settings, the cluster automatically adjusts the number of pods according to the specified metric types, within the range set by the Min/Max Replica Count.

Detailed Auto Scaling Settings

① Usage: Select whether to use the Auto Scaling property. Activating this setting applies the related configurations.

② Namespace: Automatically set to the same value as the namespace specified for the application.

③ Min Replica Count: Sets the minimum number of pod replicas to maintain for the application. Even if the platform calculates a lower number of pods from the specified metrics, the replica count does not drop below this value.

④ Max Replica Count: Sets the maximum number of pod replicas to maintain for the application. Even if the platform calculates a higher number of pods from the specified metrics, the replica count does not rise above this value.

⑤ Auto Scaling Triggers: (Mandatory) Add up to five of the metric types supported by AMDP. Each type can be added only once, but different types can be combined.

- Metric types: cpu, memory, kubernetes-workload, kafka, prometheus.

- Action: Provides modification and deletion features for each type.

⑥ Add: Adds a new metric type. Types that are already configured do not appear in the list.
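The trigger rules and the Min/Max Replica Count limits described above can be sketched as follows. This is a minimal illustration, not AMDP code: the function names `validate_triggers` and `clamp_replicas` are hypothetical, and the platform's actual validation may differ.

```python
# Metric types listed in this section.
ALLOWED_TYPES = {"cpu", "memory", "kubernetes-workload", "kafka", "prometheus"}


def validate_triggers(trigger_types):
    """Check a list of metric type names against the rules in this section.

    Hypothetical helper: raises ValueError when a rule is violated.
    """
    if not trigger_types:
        raise ValueError("at least one Auto Scaling trigger is required")
    unknown = set(trigger_types) - ALLOWED_TYPES
    if unknown:
        raise ValueError(f"unsupported metric types: {sorted(unknown)}")
    if len(set(trigger_types)) != len(trigger_types):
        raise ValueError("each metric type can be added only once")


def clamp_replicas(calculated, min_replicas, max_replicas):
    """Keep the calculated pod count within the Min/Max Replica Count."""
    if min_replicas > max_replicas:
        raise ValueError("Min Replica Count must not exceed Max Replica Count")
    return max(min_replicas, min(calculated, max_replicas))
```

For example, with a Min Replica Count of 2 and a Max Replica Count of 10, a calculated value of 1 is raised to 2 and a calculated value of 15 is lowered to 10.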

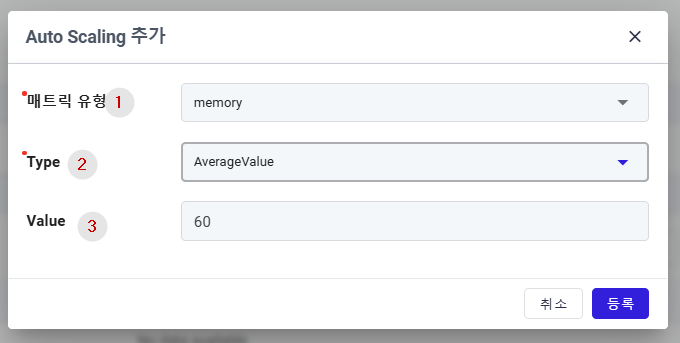

Auto Scaling [CPU / Memory] Type Settings

Use these metric types to scale the application based on its CPU or memory usage.

① Metric Type: (Mandatory) The metric type for Auto Scaling.

② Type: (Mandatory) Choose one of two sub-types for CPU and Memory:

- Utilization: Compares the baseline value, as a percentage, against the average metric value across all pods of the application.

- AverageValue: Compares the baseline value, as an absolute quantity, against the average metric value across all pods of the application.

③ Value: (Mandatory) The baseline value for CPU or Memory to trigger Auto Scaling.
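How a baseline Value might translate into a pod count can be sketched with the standard Kubernetes Horizontal Pod Autoscaler formula. That AMDP applies this exact formula is an assumption; the function name `desired_replicas` is hypothetical, and the tolerance band that Kubernetes applies around the target is omitted for brevity.

```python
import math


def desired_replicas(current_replicas, current_metric, target_value):
    """Standard HPA calculation: ceil(current replicas * current / target).

    For the Utilization sub-type, current_metric and target_value are
    percentages; for AverageValue they are absolute quantities in the
    same unit. Assumption: AMDP follows this Kubernetes formula.
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    return math.ceil(current_replicas * current_metric / target_value)


# Utilization: 4 pods averaging 90% CPU against a baseline of 60%.
print(desired_replicas(4, 90, 60))    # 6

# AverageValue: 3 pods averaging 800 (e.g. MiB) against a baseline of 500.
print(desired_replicas(3, 800, 500))  # 5
```

The result is then limited by the Min/Max Replica Count of the application.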

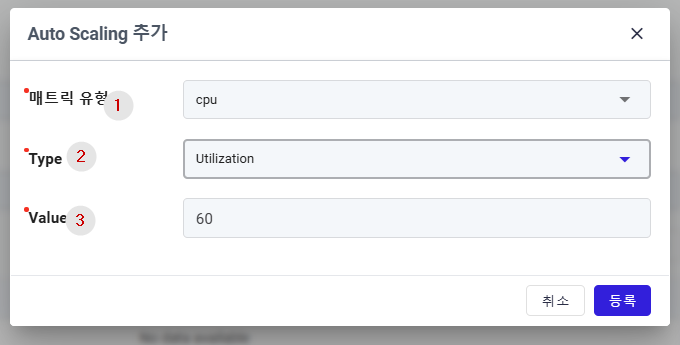

Auto Scaling CPU-workload Type Settings

Use these metric types to scale the application based on its CPU or memory usage.

① Metric Type: (Mandatory) The metric type for Auto Scaling.

② Type: (Mandatory) Choose one of two sub-types for CPU and Memory:

- Utilization: Compares the baseline value, as a percentage, against the average metric value across all pods of the application.

- AverageValue: Compares the baseline value, as an absolute quantity, against the average metric value across all pods of the application.

③ Value: (Mandatory) The baseline value for CPU or Memory to trigger Auto Scaling.

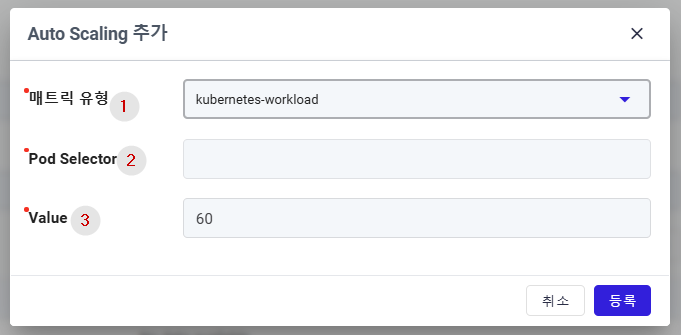

Auto Scaling kubernetes-workload Type Settings

Use this setting to scale the application based on the number of pods of another, related application.

① Metric Type: (Mandatory) The metric type for Auto Scaling.

② Pod Selector: (Mandatory) Enter the Pod Selector information used to select the related application.

Labels for applications deployed in AMDP are registered as amdp.io/app=<application name>. For example, the label registered for the hello-backend application is amdp.io/app=hello-backend.

③ Value: The pod count for the application specified by the Pod Selector to be used as a metric.
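The label convention and the role of Value can be sketched as follows. Both function names are hypothetical. The calculation assumes that Value is the number of related pods served by one replica of the scaled application, which is how the KEDA kubernetes-workload scaler interprets it; this document does not confirm that AMDP behaves the same way.

```python
import math


def amdp_pod_selector(app_name):
    """Build the Pod Selector for an application deployed in AMDP."""
    return f"amdp.io/app={app_name}"


def replicas_for_workload(matching_pods, value):
    """Assumed rule: one replica per `value` pods matched by the selector."""
    if value <= 0:
        raise ValueError("value must be positive")
    return math.ceil(matching_pods / value)


print(amdp_pod_selector("hello-backend"))  # amdp.io/app=hello-backend

# 10 hello-backend pods with a Value of 4 would suggest 3 replicas.
print(replicas_for_workload(10, 4))        # 3
```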

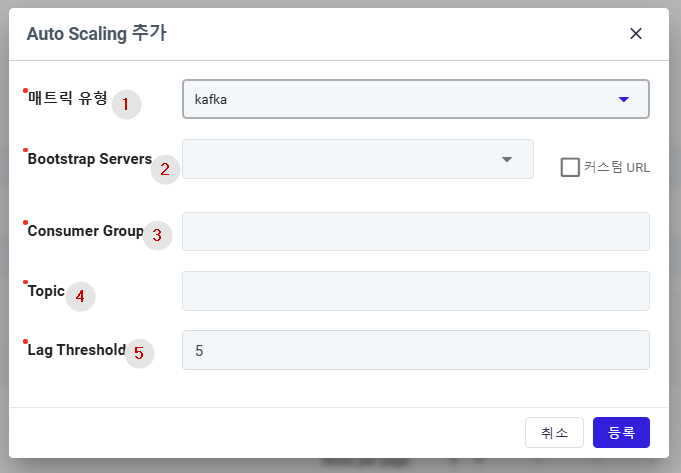

Auto Scaling kafka Type Settings

Use this setting to scale the application based on Apache Kafka topics or on services that provide the Kafka protocol.

① Metric Type: (Mandatory) The metric type for Auto Scaling.

② Bootstrap Servers: Set the Kafka bootstrap servers in hostname:port format, separating multiple servers with commas. If the servers are registered in the profile's Backing Service, they are provided as a selectable list.

③ Consumer Group: Set the name of the Kafka consumer group.

④ Topic: Set the name of the Kafka topic.

⑤ Lag Threshold: Set the lag threshold for the Kafka consumer group. The default value is 5.
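The Bootstrap Servers format and the effect of the Lag Threshold can be sketched as follows. The function names and the example hostnames are hypothetical. The replica calculation assumes one replica per Lag Threshold messages of total lag, which is a common interpretation for Kafka-based scaling but is not confirmed here for AMDP.

```python
import math


def parse_bootstrap_servers(value):
    """Split a comma-separated 'hostname:port' string into (host, port) pairs."""
    servers = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate stray commas
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected hostname:port, got {entry!r}")
        servers.append((host, int(port)))
    if not servers:
        raise ValueError("at least one bootstrap server is required")
    return servers


def replicas_for_lag(total_lag, lag_threshold=5):
    """Assumed rule: one replica per `lag_threshold` messages of lag."""
    if lag_threshold <= 0:
        raise ValueError("lag_threshold must be positive")
    return math.ceil(total_lag / lag_threshold)


print(parse_bootstrap_servers("kafka-0.example:9092, kafka-1.example:9092"))
# [('kafka-0.example', 9092), ('kafka-1.example', 9092)]

# A total lag of 23 with the default threshold of 5 would suggest 5 replicas.
print(replicas_for_lag(23))  # 5
```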

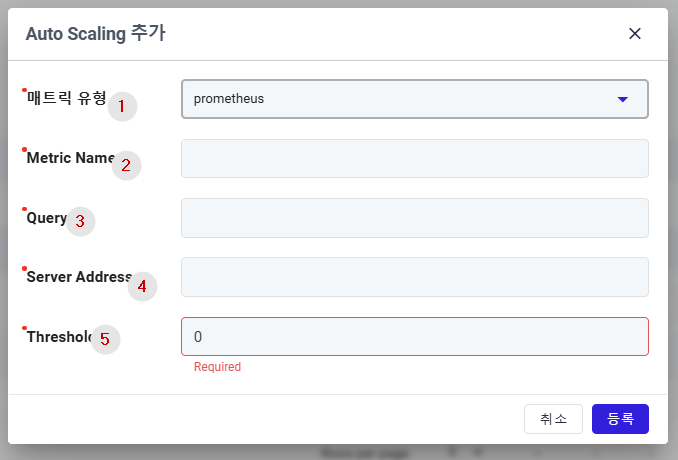

Auto Scaling prometheus Type Settings

Use this setting to scale the application based on metrics collected by the Prometheus monitoring service.

① Metric Type: (Mandatory) The metric type for Auto Scaling.

② Metric Name: Enter the Prometheus metric information. This setting will be integrated into Query in the future.

③ Query: Enter the query information based on PromQL.

④ Server Address: Enter the full address used to access the Prometheus server.

⑤ Threshold: Set the baseline value for the data returned by the Query.
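The fields above can be grouped and sanity-checked as in the sketch below. The `PrometheusTrigger` class, the example query, and the example server address are all hypothetical. It also assumes that the Server Address is an http or https URL, which this document does not state.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class PrometheusTrigger:
    """Hypothetical container for the prometheus type settings."""
    metric_name: str
    query: str           # PromQL expression
    server_address: str  # assumed to be a full http(s) URL
    threshold: float

    def validate(self):
        """Raise ValueError if a field is obviously unusable."""
        parsed = urlparse(self.server_address)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError("server_address must be a full http(s) URL")
        if not self.query.strip():
            raise ValueError("query must not be empty")
        if self.threshold <= 0:
            raise ValueError("threshold must be positive")

    def exceeds(self, query_result):
        """Return True when the query result is above the threshold."""
        return query_result > self.threshold


trigger = PrometheusTrigger(
    metric_name="http_requests_total",
    query="sum(rate(http_requests_total[2m]))",
    server_address="http://prometheus.example:9090",
    threshold=100,
)
trigger.validate()
print(trigger.exceeds(150))  # True
```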