API Gateway Resource Adjustment Methods

This guide describes three ways to tune API Gateway performance by adjusting system resources and internal configuration. Each can be applied at deployment time or later, either by editing configuration files or through the APIM console UI.

Method 1: Adjusting Pod Resources (CPU / Memory)

Concept

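You can control the CPU and memory available to the Kong Gateway Pod running in Kubernetes. Requests are the amounts the scheduler reserves for the container on a node; limits are hard caps. A container that exceeds its CPU limit is throttled, and one that exceeds its memory limit is terminated (OOMKilled), so setting both appropriately keeps the gateway stable and its performance predictable.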

Configuration Method

Set resource requests and limits in either:

- Helm values.yaml

- Kubernetes Deployment YAML

```yaml
resources:
  limits:
    cpu: "1000m"      # At most 1 CPU core
    memory: "1024Mi"  # At most 1 GiB
  requests:
    cpu: "500m"       # 0.5 core reserved for the Pod
    memory: "512Mi"   # 512 MiB reserved for the Pod
```

Location to Apply

- Helm-based deployment: add to values.yaml

- Manual deployment: add under spec.template.spec.containers[].resources (the entry for the Kong container) in the Deployment file
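The quantity strings above use Kubernetes suffixes: m means thousandths of a core, and Mi means mebibytes (2^20 bytes). The Python sketch below converts them to numbers, checks that no request exceeds its limit (the API server rejects such a Pod spec), and applies a simplified version of the QoS rule for a single-container Pod: the Pod is Guaranteed only when its CPU and memory requests equal their limits. With the values above the Pod is therefore Burstable, which means its requests are reserved but it can be evicted before Guaranteed Pods under node memory pressure. The helper names are illustrative, and only the common suffixes are handled.

```python
from decimal import Decimal

# Binary suffixes are checked first so that "Mi" is not mistaken for "M".
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"m": Decimal("0.001"), "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_quantity(text: str) -> Decimal:
    """Convert a quantity such as "500m" or "512Mi" to a number.

    CPU values come out in cores and memory values in bytes.
    Only the common suffixes are handled.
    """
    for suffixes in (BINARY_SUFFIXES, DECIMAL_SUFFIXES):
        for suffix, factor in suffixes.items():
            if text.endswith(suffix):
                return Decimal(text[: -len(suffix)]) * factor
    return Decimal(text)


def validate(requests: dict, limits: dict) -> list:
    """Return a message for every resource whose request exceeds its limit."""
    problems = []
    for name in sorted(requests.keys() & limits.keys()):
        if parse_quantity(requests[name]) > parse_quantity(limits[name]):
            problems.append(f"{name}: request {requests[name]} exceeds limit {limits[name]}")
    return problems


def qos_class(requests: dict, limits: dict) -> str:
    """Simplified QoS rule for a Pod with a single container."""
    # A missing request defaults to the matching limit.
    effective = {**limits, **requests}
    if all(
        name in limits and parse_quantity(effective[name]) == parse_quantity(limits[name])
        for name in ("cpu", "memory")
    ):
        return "Guaranteed"
    if requests or limits:
        return "Burstable"
    return "BestEffort"


limits = {"cpu": "1000m", "memory": "1024Mi"}
requests = {"cpu": "500m", "memory": "512Mi"}
print(parse_quantity(limits["memory"]) == 2**30)  # True: 1024Mi is exactly 1 GiB
print(validate(requests, limits))                 # []
print(qos_class(requests, limits))                # Burstable
```

Setting requests equal to limits would make the Pod Guaranteed at the cost of reserving the full 1 core and 1 GiB on the node.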

Related UI

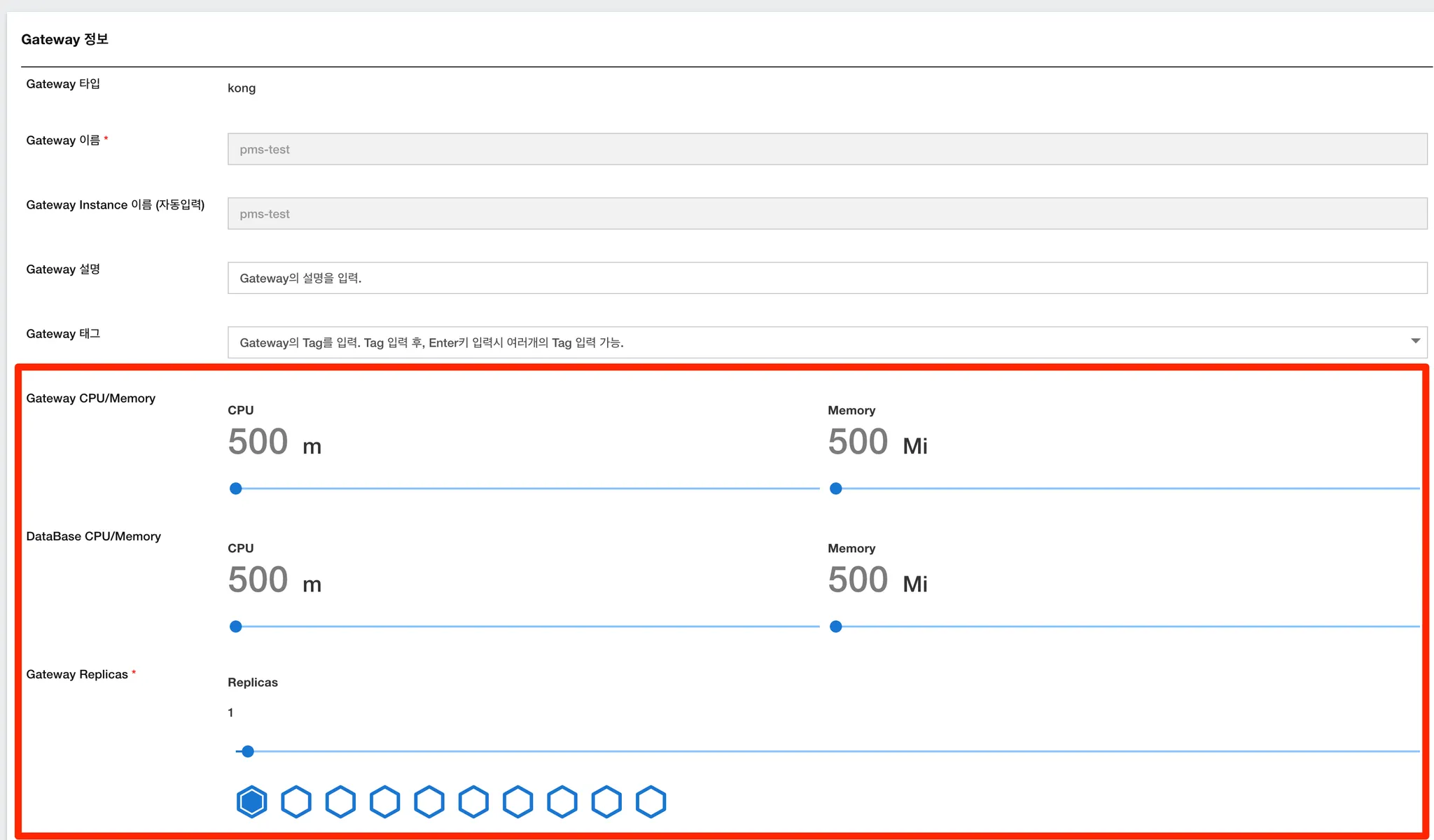

In the APIM console, navigate to the Gateway Management Page, where you can visually configure:

- Gateway CPU / Memory

- Database CPU / Memory

- Gateway Replica count

Method 2: Tuning Nginx Settings via Environment Variables

Concept

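Kong runs on Nginx, and several performance-related Nginx settings can be adjusted through Kong configuration properties, which are usually supplied as environment variables. These settings determine how the gateway buffers client requests, how large a request it accepts, and how many connections each worker can handle. Kong generates its nginx.conf from these properties at startup, so set them as properties rather than editing the generated file by hand: properties prefixed with nginx_http_ or nginx_events_ are injected as the matching directive into the http or events block.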

Configuration Method

Set values through:

- KONG_* environment variables

- Helm values.yaml env section

```yaml
env:
  KONG_PROXY_ACCESS_LOG: "/dev/stdout"
  KONG_ADMIN_ACCESS_LOG: "/dev/stdout"
  KONG_NGINX_HTTP_CLIENT_BODY_BUFFER_SIZE: "16k"
  KONG_NGINX_HTTP_CLIENT_MAX_BODY_SIZE: "128m"
  KONG_NGINX_HTTP_LARGE_CLIENT_HEADER_BUFFERS: "4 16k"
  KONG_NGINX_WORKER_PROCESSES: "2"
  KONG_NGINX_EVENTS_WORKER_CONNECTIONS: "2048"
```

Equivalent in nginx.conf

```nginx
# Main context
worker_processes 2;

events {
    worker_connections 2048;
}

http {
    client_body_buffer_size 16k;
    client_max_body_size 128m;
    large_client_header_buffers 4 16k;
}
```
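The arithmetic behind these directives can be sketched in Python. nginx sizes use k for 1024 bytes and m for 1024 × 1024 bytes. A request body larger than client_body_buffer_size is written to a temporary file, and one larger than client_max_body_size is rejected with 413 (a value of 0 disables that check). worker_connections counts every connection a worker holds, including connections to upstream services, so 2 workers × 2048 connections serve fewer than 4096 concurrent clients. The function names are illustrative, the size parser handles only plain byte counts and the k and m suffixes, and the "one upstream connection per proxied request" figure is an assumption.

```python
# nginx sizes: k/K = 1024 bytes, m/M = 1024 * 1024 bytes, no suffix = bytes.
NGINX_UNITS = {"k": 1024, "m": 1024 * 1024}


def nginx_size(value: str) -> int:
    """Convert an nginx size such as "16k" or "128m" to bytes."""
    unit = value[-1].lower()
    if unit in NGINX_UNITS:
        return int(value[:-1]) * NGINX_UNITS[unit]
    return int(value)


def body_handling(body_bytes: int, buffer_size: str = "16k", max_body_size: str = "128m") -> str:
    """Describe what nginx does with a request body of the given size."""
    limit = nginx_size(max_body_size)
    if limit and body_bytes > limit:  # a limit of 0 disables the size check
        return "rejected with 413"
    if body_bytes > nginx_size(buffer_size):
        return "written to a temporary file"
    return "kept in memory"


def connection_capacity(worker_processes: int, worker_connections: int) -> dict:
    """Per-Pod connection ceiling; assumes one upstream connection per proxied request."""
    total = worker_processes * worker_connections
    return {"total_connections": total, "approx_proxied_requests": total // 2}


def header_buffers(spec: str = "4 16k") -> dict:
    """Split a large_client_header_buffers value into count and per-buffer size."""
    count, size = spec.split()
    # A single request line or header field must fit into one buffer.
    return {"buffers": int(count), "max_line_bytes": nginx_size(size)}


print(body_handling(8 * 1024))           # kept in memory
print(body_handling(1024 * 1024))        # written to a temporary file
print(body_handling(200 * 1024 * 1024))  # rejected with 413
print(connection_capacity(2, 2048))      # {'total_connections': 4096, 'approx_proxied_requests': 2048}
print(header_buffers())                  # {'buffers': 4, 'max_line_bytes': 16384}
```

Raising client_body_buffer_size keeps more bodies in memory at the cost of more RAM per in-flight request, which ties back to the memory limit set in Method 1.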

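These settings can be declared as environment variables under env in values.yaml; when you deploy, Helm renders them into the container's environment in the Deployment. If you use the official Kong Helm chart (kong/kong), write the keys in lowercase without the KONG_ prefix (for example, nginx_worker_processes: "2"); the chart adds the prefix when it renders the Deployment.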
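Kong reads an environment variable named KONG_<PROPERTY> as the kong.conf property of the same name in lowercase, and properties with an injection prefix such as nginx_http_ or nginx_events_ become an Nginx directive in the matching block. The sketch below models that naming convention for a few prefixes so you can check where an env entry ends up. It is a simplified, hypothetical helper, not Kong's own configuration loader, and apart from nginx_worker_processes it does not try to map properties that Kong renders through its templates.

```python
# Simplified, hypothetical model of Kong's naming convention; not Kong's loader.
INJECTION_PREFIXES = {
    "nginx_main_": "main",
    "nginx_events_": "events",
    "nginx_http_": "http",
    "nginx_proxy_": "proxy server",
    "nginx_admin_": "admin server",
}
# nginx_worker_processes is a regular Kong property rendered by Kong's template.
TEMPLATE_PROPERTIES = {"nginx_worker_processes": ("main", "worker_processes")}


def kong_property(env_name: str):
    """KONG_NGINX_HTTP_X -> nginx_http_x; None for variables without the KONG_ prefix."""
    prefix = "KONG_"
    if not env_name.startswith(prefix):
        return None
    return env_name[len(prefix):].lower()


def nginx_directive(prop: str):
    """Return (block, directive) for properties this model knows how to place."""
    if prop in TEMPLATE_PROPERTIES:
        return TEMPLATE_PROPERTIES[prop]
    for prefix, block in INJECTION_PREFIXES.items():
        if prop.startswith(prefix):
            return block, prop[len(prefix):]
    return None  # e.g. proxy_access_log: a Kong property this model does not map


env = {
    "KONG_PROXY_ACCESS_LOG": "/dev/stdout",
    "KONG_NGINX_HTTP_CLIENT_MAX_BODY_SIZE": "128m",
    "KONG_NGINX_WORKER_PROCESSES": "2",
    "KONG_NGINX_EVENTS_WORKER_CONNECTIONS": "2048",
}
for name, value in env.items():
    prop = kong_property(name)
    print(f"{name} -> {prop} -> {nginx_directive(prop)} = {value}")
```

A name that maps to no known property or injection prefix is a sign of a typo, since the gateway will not pick it up as intended.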

Method 3: Tuning Database Connection and Cache Settings

Concept

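When Kong uses PostgreSQL, resource usage depends on how many database connections and concurrent queries each Nginx worker is allowed, and on how database entities are cached in memory. In DB-less mode (KONG_DATABASE: "off") Kong opens no database connections, so the PostgreSQL settings below do not apply.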

Configuration Method

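```yaml
env:
  KONG_DATABASE: "postgres"
  KONG_PG_MAX_CONCURRENT_QUERIES: "100"
  KONG_PG_POOL_SIZE: "200"
```

Caching can also be tuned; both properties below are available in Kong 3.x:

```yaml
env:
  KONG_DB_CACHE_TTL: "3600"  # Cache TTL in seconds
  KONG_DB_CACHE_WARMUP_ENTITIES: "services,routes,plugins"
```

Parameter Descriptions

- KONG_PG_MAX_CONCURRENT_QUERIES: Maximum number of concurrent queries per Nginx worker (0 means no limit)

- KONG_PG_POOL_SIZE: Size limit of the PostgreSQL connection pool, per Nginx worker rather than per Kong instance

- KONG_DB_CACHE_TTL: Time-to-live, in seconds, of entities cached from the database (0 means cached entities do not expire)

- KONG_DB_CACHE_WARMUP_ENTITIES: Entities preloaded into the cache at startup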
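Kong documents pg_max_concurrent_queries (KONG_PG_MAX_CONCURRENT_QUERIES) and pg_pool_size (KONG_PG_POOL_SIZE) as per-worker limits, so the numbers a PostgreSQL server sees multiply by the number of Nginx workers and by the number of gateway Pods. The sketch below does that arithmetic and compares the fleet-wide query ceiling with the server's max_connections setting, whose PostgreSQL default is 100. The replica count and helper names are hypothetical inputs; this is rough capacity arithmetic, not a model of how Kong opens or reuses connections.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DbSettings:
    pg_max_concurrent_queries: int  # per Nginx worker; 0 means no limit
    pg_pool_size: int               # per Nginx worker
    worker_processes: int           # KONG_NGINX_WORKER_PROCESSES
    replicas: int                   # number of gateway Pods (hypothetical input)


def fleet_totals(s: DbSettings) -> dict:
    """Multiply the per-worker limits out to the whole gateway fleet."""
    workers = s.worker_processes * s.replicas
    queries: Optional[int] = (
        s.pg_max_concurrent_queries * workers if s.pg_max_concurrent_queries else None
    )
    return {
        "workers": workers,
        "pool_capacity": s.pg_pool_size * workers,
        "max_concurrent_queries": queries,  # None: 0 disables the per-worker query limit
    }


def fits_server(s: DbSettings, server_max_connections: int = 100) -> bool:
    """True if the fleet-wide query ceiling stays within PostgreSQL's max_connections."""
    queries = fleet_totals(s)["max_concurrent_queries"]
    return queries is not None and queries <= server_max_connections


current = DbSettings(pg_max_concurrent_queries=100, pg_pool_size=200, worker_processes=2, replicas=3)
print(fleet_totals(current))  # {'workers': 6, 'pool_capacity': 1200, 'max_concurrent_queries': 600}
print(fits_server(current))   # False
```

With the values from this section and a hypothetical 3 replicas, the fleet could run up to 600 concurrent queries, well above a default max_connections of 100, so either the per-worker limit or the server setting would need adjusting.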

These values can be managed directly in the Gateway Management Page of the APIM console.
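The two cache settings can be pictured with a toy TTL cache: warm-up loads selected entries before the first request, and a cached entry is reloaded from the database once it is older than the TTL, with a TTL of 0 meaning it never expires. This Python class is a conceptual illustration only. Kong's real cache is built on lua-resty-mlcache and also handles negative caching and invalidation events when entities change, none of which the sketch models. The loader function and entity keys are hypothetical.

```python
import time
from typing import Callable, Dict, Hashable, Iterable, Optional, Tuple


class TtlCache:
    """Toy TTL cache: entries expire `ttl` seconds after loading; ttl == 0 never expires."""

    def __init__(self, ttl: float, loader: Callable[[Hashable], object],
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.loader = loader  # stands in for a database read
        self.clock = clock
        self.entries: Dict[Hashable, Tuple[object, Optional[float]]] = {}
        self.misses = 0

    def _store(self, key: Hashable, value: object) -> None:
        expires = None if self.ttl == 0 else self.clock() + self.ttl
        self.entries[key] = (value, expires)

    def warm_up(self, keys: Iterable[Hashable]) -> None:
        """Preload entries before any request arrives (the idea behind warm-up entities)."""
        for key in keys:
            self._store(key, self.loader(key))

    def get(self, key: Hashable) -> object:
        cached = self.entries.get(key)
        if cached is not None:
            value, expires = cached
            if expires is None or self.clock() < expires:
                return value
        self.misses += 1  # first use or expired: go back to the database
        value = self.loader(key)
        self._store(key, value)
        return value


now = [0.0]  # fake clock so the example does not need to sleep
cache = TtlCache(ttl=3600, loader=lambda key: {"id": key}, clock=lambda: now[0])
cache.warm_up(["example-service"])
cache.get("example-service")  # served from the warmed-up cache
now[0] = 3601.0
cache.get("example-service")  # older than the TTL, so reloaded
print(cache.misses)           # 1
```

A longer TTL means fewer database reads per worker, while warm-up shifts the first reads from request time to startup.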

Summary Table of Key Configuration Parameters

| Parameter | Description | Where to Set |
|---|---|---|
| resources.limits.cpu / memory | Maximum CPU and memory for the Pod | Deployment / values.yaml |
| resources.requests.cpu / memory | CPU and memory reserved for the Pod at scheduling | Deployment / values.yaml |
| KONG_NGINX_HTTP_CLIENT_MAX_BODY_SIZE | Maximum client request body size | env (client_max_body_size in nginx.conf) |
| KONG_NGINX_WORKER_PROCESSES | Number of Nginx worker processes | env (worker_processes in nginx.conf) |
| KONG_PG_POOL_SIZE | PostgreSQL connection pool size, per worker | env |
| KONG_DB_CACHE_TTL | Time-to-live for cached database entities, in seconds | env |
| KONG_DB_CACHE_WARMUP_ENTITIES | Entities preloaded into the cache at startup | env |