Architectures

APIM Deployment in DEV/STG/PRD Environments

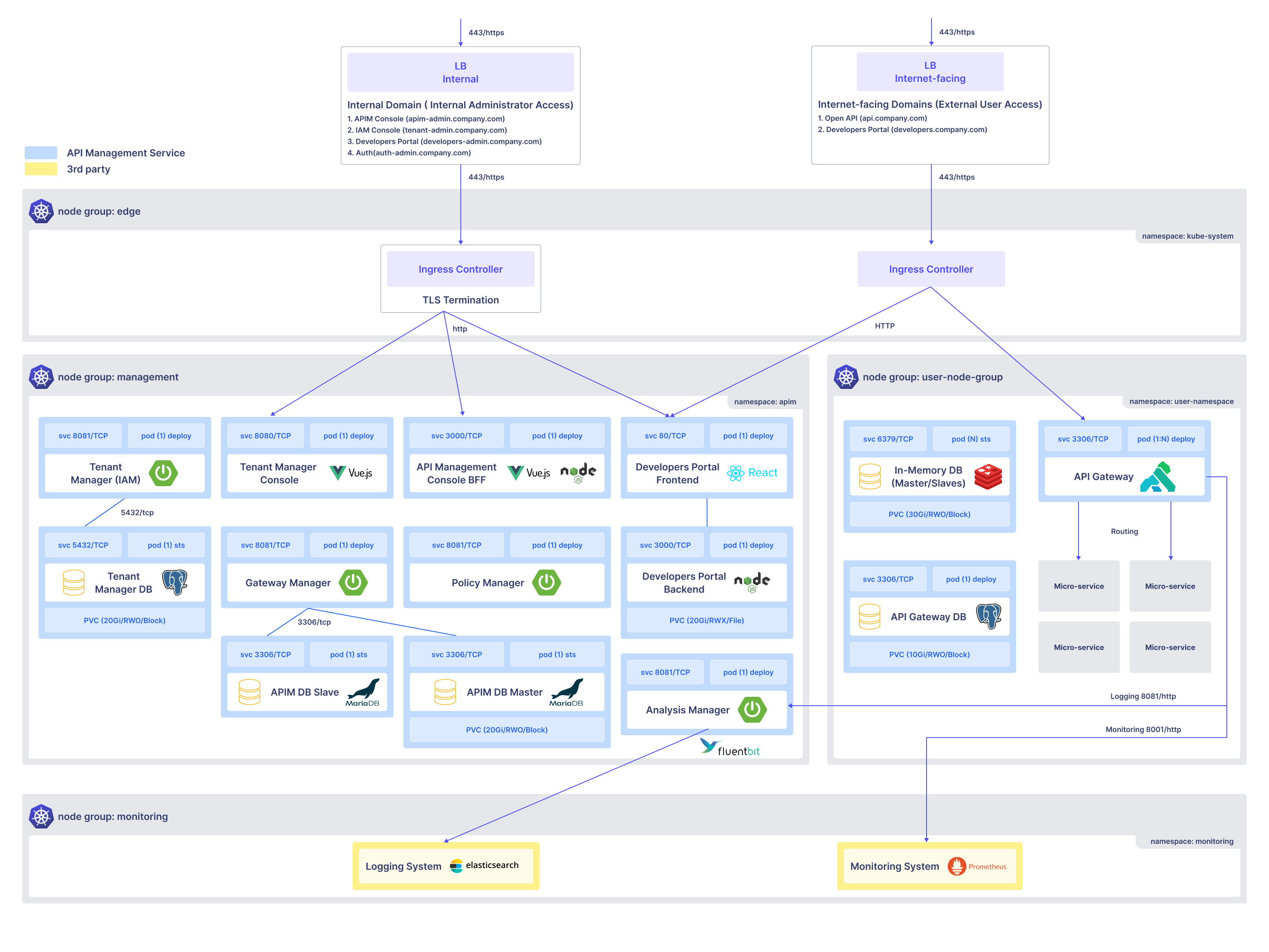

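In this architecture, the APIM platform is deployed on a Kubernetes cluster and segmented by namespace and role. The system is designed to run independently in each environment, such as development (DEV), staging (STG), and production (PRD).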

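Ingress Layer and External/Internal Routing

At the top layer, the system uses two load balancers (LBs):

Internal LB (administrator access) - provides access to:

- APIM Console (apim-admin.company.com)

- IAM Console (tenant-admin.company.com)

- Admin Developer Portal (developers-admin.company.com)

- Authentication Console (auth-admin.company.com)

Internet-facing LB (user access) - provides external access to:

- Open API services (api.company.com)

- Public Developer Portal (developers.company.com)

Traffic is routed through a centralized ingress controller, which terminates TLS and forwards requests over HTTP to internal services based on the request's domain and path.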
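The host-based split between the two load balancers can be sketched as a lookup table. This is a minimal illustration, not the ingress controller's actual configuration: the hostnames come from the lists above, while the service names and the `resolve` helper are hypothetical, and path-based matching is omitted for brevity.

```python
from typing import NamedTuple, Optional


class Route(NamedTuple):
    load_balancer: str  # "internal" or "internet-facing"
    service: str        # hypothetical internal service name


# Hostnames are taken from the document; service names are illustrative only.
ROUTES = {
    "apim-admin.company.com": Route("internal", "apim-console"),
    "tenant-admin.company.com": Route("internal", "iam-console"),
    "developers-admin.company.com": Route("internal", "admin-developer-portal"),
    "auth-admin.company.com": Route("internal", "auth-console"),
    "api.company.com": Route("internet-facing", "api-gateway"),
    "developers.company.com": Route("internet-facing", "public-developer-portal"),
}


def resolve(host: str, arrived_via: str) -> Optional[Route]:
    """Return the route for a host, or None if the host is unknown or
    the request arrived through the wrong load balancer."""
    route = ROUTES.get(host.lower())
    if route is None or route.load_balancer != arrived_via:
        return None
    return route
```

For example, `resolve("apim-admin.company.com", "internet-facing")` returns `None`, which mirrors the intent that admin consoles are reachable only through the internal LB.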

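Node Group: Management

This group hosts all the core components needed to manage tenants, projects, gateways, APIs, and policies.

Key namespace:

namespace: apim

Core components:

| Component | Description |
|---|---|
| Tenant Manager (IAM) | Handles identity and access management for system users and tenant organizations |
| Tenant Manager Console | UI frontend for tenant administrators (built with Vue.js) |
| API Management Console BFF | Backend-for-frontend that coordinates UI and service interactions (Vue.js and Node.js) |
| Gateway Manager | Controls gateway configuration and the association of gateways with projects |
| Policy Manager | Manages inbound/outbound policy definitions such as IP filtering, authentication, and logging |
| Developer Portal (frontend and backend) | Interface where API consumers browse and test published APIs |
| Analysis Manager | Handles real-time API usage analytics and reporting (connected to FluentBit) |

Persistent databases:

- Tenant Manager database (PostgreSQL)

- APIM database, master/slave (MariaDB)

- PVCs are provisioned for data persistence and redundancy.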

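Node Group: User

This group handles runtime API traffic and routes user API calls to the backend microservices.

Namespace:

namespace: user-namespace

Components:

| Component | Description |
|---|---|
| API Gateway | Kong-based gateway that handles incoming API requests |
| API Gateway database | PostgreSQL store for runtime gateway configuration and state |
| In-memory database (master/slave) | Token/session store (possibly Redis or similar) |
| Microservices | The actual backend services that receive routed API traffic |

The API Gateway receives requests from external users and performs:

- Policy enforcement (authentication, IP filtering, and so on)

- Routing to the appropriate microservice

- Returning the response through the ingress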
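The three gateway steps above can be sketched as a small request pipeline. This is a simplified model under stated assumptions, not how Kong implements its plugins: the `Request` type, the `ip_filter` and `require_api_key` policies, the `x-api-key` header, and the prefix-based `handle` function are all hypothetical names introduced for illustration.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Request:
    path: str
    client_ip: str
    headers: Dict[str, str] = field(default_factory=dict)


# A policy returns None to allow the request, or (status, message) to reject it.
Policy = Callable[[Request], Optional[Tuple[int, str]]]


def ip_filter(allowed_cidr: str) -> Policy:
    network = ipaddress.ip_network(allowed_cidr)

    def check(req: Request) -> Optional[Tuple[int, str]]:
        if ipaddress.ip_address(req.client_ip) not in network:
            return 403, "client IP not allowed"
        return None

    return check


def require_api_key(valid_keys: set) -> Policy:
    def check(req: Request) -> Optional[Tuple[int, str]]:
        if req.headers.get("x-api-key") not in valid_keys:
            return 401, "missing or invalid API key"
        return None

    return check


def handle(
    req: Request,
    policies: List[Policy],
    upstreams: Dict[str, Callable[[Request], str]],
) -> Tuple[int, str]:
    # Step 1: policy enforcement; the first rejection short-circuits.
    for policy in policies:
        rejection = policy(req)
        if rejection is not None:
            return rejection
    # Step 2: route to the microservice with the longest matching path prefix.
    for prefix in sorted(upstreams, key=len, reverse=True):
        if req.path.startswith(prefix):
            # Step 3: hand the upstream's response back to the caller.
            return 200, upstreams[prefix](req)
    return 404, "no route"
```

Policies run in list order, so placing the IP filter before the key check rejects disallowed networks without inspecting credentials.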

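Node Group: Monitoring

| Component | Description |
|---|---|
| Logging system | Backed by Elasticsearch; collects structured API logs |
| Monitoring system | Backed by Prometheus; collects metrics for system health and alerting |

The logging and monitoring components integrate with FluentBit and the API gateway logs to provide:

- Real-time insight into API traffic

- Custom metric visualization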

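System Communication Flow

- Administrators access the system through the ingress controller via internal domains.

- Users call the open APIs and the Developer Portal via external domains; these requests are routed to the Kong gateway.

- Kong enforces API policies (inbound/outbound) and routes requests to the corresponding microservices.

- Logs and metrics from all components are streamed to the monitoring and logging stack.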

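APIM Deployment Integrated with Cloud and Third-Party Services

This architecture shows how the APIM system integrates with external infrastructure (such as AWS) and with logging/monitoring services (such as CloudWatch and Datadog).

How It Works:

- Private or public DNS (AWS Route53) routes requests into the Kubernetes cluster where the APIM services run.

- Once a request reaches Kong Gateway, inbound policies are executed (authentication, header injection, and so on) and the traffic is then routed to the backend service.

- Responses are processed by outbound policies (for example, data masking and logging) and returned to the client.

- All request/response logs and metrics are forwarded to CloudWatch, Datadog, or Whatap through integrated third-party exporters.

- Swagger-based specification registration is used to expose or update APIs dynamically through the Developer Portal.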
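To make the inbound header injection and outbound data masking steps more concrete, here is a minimal sketch. It assumes JSON-like request and response bodies; the function names, the `X-Tenant-Id` header, and the field names are hypothetical and do not describe the platform's actual policy definitions.

```python
from typing import Any, Dict, Iterable


def inject_headers(headers: Dict[str, str], extra: Dict[str, str]) -> Dict[str, str]:
    """Inbound step: return a copy of the headers with extra ones added."""
    merged = dict(headers)
    merged.update(extra)
    return merged


def mask_fields(payload: Any, sensitive: Iterable[str], mask: str = "****") -> Any:
    """Outbound step: return a copy of the payload in which every value whose
    key matches a sensitive name (case-insensitive) is replaced by the mask."""
    keys = {name.lower() for name in sensitive}
    if isinstance(payload, dict):
        return {
            key: mask if key.lower() in keys else mask_fields(value, keys, mask)
            for key, value in payload.items()
        }
    if isinstance(payload, list):
        return [mask_fields(item, keys, mask) for item in payload]
    return payload  # scalars pass through unchanged


# Example: the original response body is left untouched.
body = {"user": {"name": "kim", "ssn": "123-45-6789"}}
masked = mask_fields(body, ["ssn"])
```

Returning a new structure rather than mutating the response in place keeps the unmasked body available to any policy that legitimately needs it, such as internal logging with its own redaction rules.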

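This architecture supports secure, scalable, and observable API management across organizational boundaries. It ensures API governance while allowing seamless extension to cloud-native services.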

Internal Deployment for Development Environment

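This version reflects a development-environment setup of the APIM platform that is intended for internal use only. It emphasizes security and closed access during API testing and service development.

How It Works:

- All traffic flows internally and enters the cluster through private DNS and an ALB.

- Internal developers access the APIM Console and the Developer Portal through predefined internal subdomains.

- API traffic from development frontend applications is sent to Kong Gateway, where all configured policies are applied.

- Backend microservices respond to the requests routed through the gateway.

- The entire stack is separated by namespace for easier maintenance and role separation:

  - apim contains configuration and control logic.

  - microservices contains runtime services and business logic.

This architecture allows secure API development and testing without exposure to public networks. It is well suited to validating services, applying policies, and verifying access control before a staging or production release.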

Dev-Only Internal Flow Model

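This architecture shows the detailed internal traffic flow in the development environment, with a focus on network boundaries and isolation.

How It Works:

- Internal applications and developers interact with the APIM Console or the Developer Portal through private domains and NLB/ALB routing.

- Requests from the frontend are routed to the Kong gateway, where runtime policies such as authentication, rate limiting, and transformation are enforced.

- The gateway routes requests to backend microservices hosted in the same cluster.

- API usage data, logs, and traffic statistics are sent to internal observability tools such as Datadog, providing visibility during development operations.

- This environment has no public-facing access point: all components, including the API gateway, are strictly internal.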
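Rate limiting is one of the runtime policies named above. A common way to implement it is a token bucket, sketched below. This is a generic illustration and is not claimed to be the algorithm Kong uses; the class name and parameters are assumptions, and the current time is passed in explicitly so the behavior is easy to test.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start with a full bucket
        self.last = now

    def allow(self, now: float) -> bool:
        # Add tokens for the time elapsed since the last call, up to capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = max(self.last, now)
        # Spend one token per request if one is available.
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Example: 2-request burst, refilling one token per second.
bucket = TokenBucket(capacity=2, refill_per_second=1.0)
results = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
```

In a development environment, a small capacity makes it easy to trigger the limit deliberately and confirm that clients handle rejected requests correctly.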

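This setup provides a secure, isolated pipeline for developing and testing APIs while retaining full monitoring and governance capabilities. It lets development teams simulate production-like API behavior without external exposure.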

Component Descriptions and Resources

This table lists the CPU, memory, and storage resources allocated to each component of the APIM control plane. It helps infrastructure and DevOps teams plan and provision the Kubernetes cluster accurately and efficiently.

| Instance | Description | Kind | Replicas | CPU (cores) | CPU Total (cores) | Memory (Mi) | Memory Total (Mi) | Storage (GB) | Storage Total (GB) |
|---|---|---|---|---|---|---|---|---|---|
| deploy-apim-analysis-manager | Analysis Manager | Deployment | 1 | 0.5 | 0.5 | 1024 | 1024 | 0 | 0 |
| deploy-apim-bff | APIM Console BFF | Deployment | 1 | 0.5 | 0.5 | 512 | 512 | 0 | 0 |
| deploy-apim-gateway-manager | Gateway Manager | Deployment | 1 | 0.5 | 0.5 | 768 | 768 | 0 | 0 |
| deploy-apim-tenant-manager | Tenant Manager (IAM) | Deployment | 1 | 0.5 | 0.5 | 768 | 768 | 0 | 0 |
| deploy-apim-tenant-manager-console | Tenant Manager Console | Deployment | 1 | 0.2 | 0.2 | 512 | 512 | 0 | 0 |
| deploy-apim-policy-manager | Policy Manager | Deployment | 1 | 0.2 | 0.2 | 512 | 512 | 0 | 0 |
| deploy-apim-developer-portal-backend | Developer Portal backend | Deployment | 1 | 0.2 | 0.2 | 512 | 512 | 20 | 20 |
| deploy-apim-developer-portal-frontend | Developer Portal frontend | Deployment | 1 | 0.2 | 0.2 | 64 | 64 | 0 | 0 |
| deploy-apim-mariadb-master | APIM database (MariaDB master) | StatefulSet | 1 | 0.5 | 0.5 | 512 | 512 | 10 | 10 |
| deploy-apim-mariadb-slave | APIM database (MariaDB slave) | StatefulSet | 1 | 0.2 | 0.2 | 256 | 256 | 0 | 0 |
| statefulset-apim-tenant-manager-postgresql | IAM database (PostgreSQL) | StatefulSet | 1 | 0.5 | 0.5 | 256 | 256 | 10 | 10 |
| Total | | | | | 4 | | 5760 | | 40 |

- CPU: 4 cores

- Memory: approximately 6 GiB

- Storage: 40 GB
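The totals can be cross-checked by summing the per-component rows. The sketch below copies the replica, CPU, memory, and storage values from the table; the `Component` type and `totals` function are hypothetical helpers. Summing the rows as listed gives 4 cores and 40 GB, matching the table, but 5696 Mi of memory, which is 64 Mi less than the stated 5760 Mi, so one of the memory figures may need to be verified against the actual manifests.

```python
from typing import List, NamedTuple, Tuple


class Component(NamedTuple):
    name: str
    replicas: int
    cpu_cores: float
    memory_mi: int
    storage_gb: int


# Values copied from the resource table.
COMPONENTS = [
    Component("deploy-apim-analysis-manager", 1, 0.5, 1024, 0),
    Component("deploy-apim-bff", 1, 0.5, 512, 0),
    Component("deploy-apim-gateway-manager", 1, 0.5, 768, 0),
    Component("deploy-apim-tenant-manager", 1, 0.5, 768, 0),
    Component("deploy-apim-tenant-manager-console", 1, 0.2, 512, 0),
    Component("deploy-apim-policy-manager", 1, 0.2, 512, 0),
    Component("deploy-apim-developer-portal-backend", 1, 0.2, 512, 20),
    Component("deploy-apim-developer-portal-frontend", 1, 0.2, 64, 0),
    Component("deploy-apim-mariadb-master", 1, 0.5, 512, 10),
    Component("deploy-apim-mariadb-slave", 1, 0.2, 256, 0),
    Component("statefulset-apim-tenant-manager-postgresql", 1, 0.5, 256, 10),
]


def totals(components: List[Component]) -> Tuple[float, int, int]:
    """Return (CPU cores, memory in Mi, storage in GB), scaled by replicas."""
    cpu = sum(c.replicas * c.cpu_cores for c in components)
    memory = sum(c.replicas * c.memory_mi for c in components)
    storage = sum(c.replicas * c.storage_gb for c in components)
    return round(cpu, 2), memory, storage


cpu_total, memory_total, storage_total = totals(COMPONENTS)
```

Because each row is multiplied by its replica count, the same function can be reused to estimate capacity after changing the `replicas` values.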

Notes:

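- CPU and memory increase according to the replica scaling policy.

- Logging/monitoring storage varies with traffic volume.

- One public and one private APIM deployment are supported.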